This guide explains how to configure Databricks on Google Cloud Platform (GCP) to work with service accounts that span multiple GCP projects. It outlines the steps and best practices for enabling secure access to GCP resources from a Databricks environment across projects, along with instructions for setting up Databricks itself for this configuration.

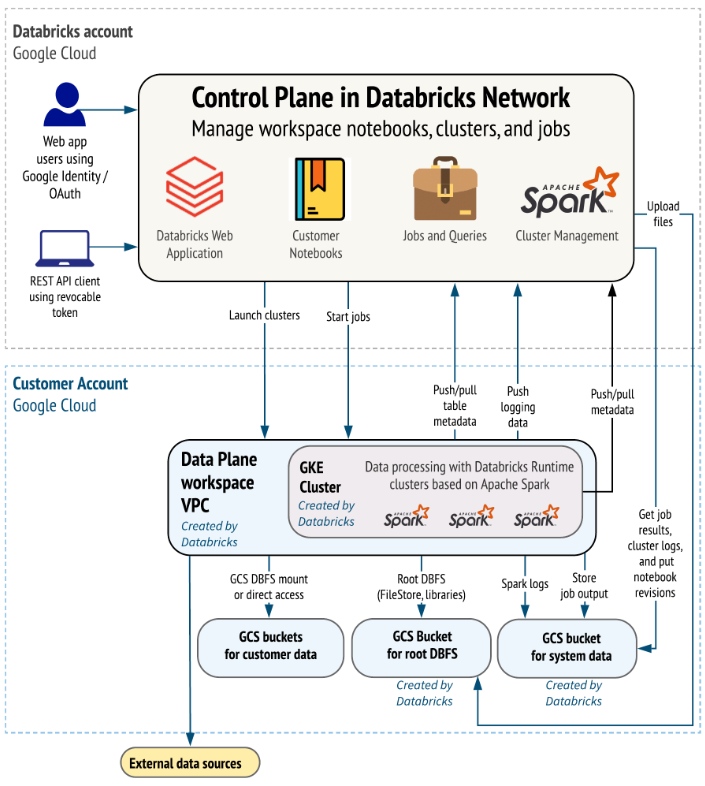

Databricks on Google Cloud runs on Google Kubernetes Engine (GKE), Google Cloud’s secure managed Kubernetes service, which supports containerized deployments of Databricks in the cloud. This lets you run workloads on high-performance infrastructure, potentially faster and at lower cost, and migrate Databricks workloads to Google Cloud quickly and securely. On GCP, a service account makes it straightforward to enable the necessary services, whether they are in the same GCP project or in different projects.

A Service Account in Google Cloud Platform (GCP) is a specific type of Google Account that represents a non-human user or entity, typically used for authentication and authorization when interacting with GCP services and resources. Service Accounts are often used by applications, virtual machines (VMs), containers, or other services to access GCP resources securely without human intervention.

Here's a high-level overview of the steps to create and use a Service Account in GCP:

Use the GCP Console, the Google Cloud CLI (gcloud), or the IAM API to create a new service account in your GCP project.

Specify the roles or permissions you want the Service Account to have within your project. These roles determine what actions the Service Account can perform.

Generate a key file (typically in JSON format) for the Service Account. This file contains the private key that the service account will use to authenticate itself.

In your application or service, use the Service Account credentials to authenticate and make authorized API requests to GCP resources.
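The first three steps above can be sketched with the gcloud CLI. The snippet below only assembles the commands and does not run them; the project ID, account name, role, and key path are hypothetical placeholders, and the flags should be checked against the gcloud reference for your installed version.

```python
"""Sketch: assemble gcloud commands for creating a service account,
granting it a role, and generating a JSON key.

Assumptions: gcloud is installed and authenticated; every name passed in
below is a hypothetical placeholder.
"""
import shlex


def service_account_commands(project_id: str, account_name: str,
                             role: str, key_path: str) -> list:
    """Return argv lists for: create account, grant a role, create a JSON key."""
    email = f"{account_name}@{project_id}.iam.gserviceaccount.com"
    return [
        # Step 1: create the service account in the project.
        ["gcloud", "iam", "service-accounts", "create", account_name,
         f"--project={project_id}"],
        # Step 2: grant it a role on the project.
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member=serviceAccount:{email}", f"--role={role}"],
        # Step 3: generate a JSON key file holding the private key.
        ["gcloud", "iam", "service-accounts", "keys", "create", key_path,
         f"--iam-account={email}"],
    ]


if __name__ == "__main__":
    cmds = service_account_commands("my-project", "databricks-gcs",
                                    "roles/storage.objectViewer", "key.json")
    for argv in cmds:
        # Print shell-quoted commands for review instead of executing them.
        print(shlex.join(argv))
```

Passing each list to `subprocess.run(argv, check=True)` would execute it; building argument lists instead of shell strings avoids quoting problems with e-mail addresses and paths.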

Service Accounts play a crucial role in securing GCP environments, enabling automation, and managing access to cloud resources in a controlled and granular manner.

To create a workspace, your Google account needs permissions including the following:

iam.serviceAccounts.getIamPolicy

iam.serviceAccounts.setIamPolicy

When creating the workspace, choose one of the following network types:

Databricks-managed VPC

Customer-managed VPC

Create a service account whose name contains the keyword "databricks".

Provide the following information while creating the workspace:

Workspace name

Region

Google Cloud project ID

Network type

Configure the settings for private GKE clusters.

Create a service account whose name contains the keyword "databricks"; otherwise, the default service account is used instead, and if the default service account is disabled, the cluster will not start.

Assign the ‘Kubernetes Engine Node Service Account Role’ to the new service account.

Set the cluster's availability zone to ‘auto’; otherwise, nodes are spread across different zones.

You can specify a Google service account in the cluster configuration for accessing storage.

Keep the default ‘Databricks Service IAM Role for Workspace’ role enabled.
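The naming guidance above can be checked before creating the account. This is a minimal sketch: the "databricks" keyword rule comes from this article, while the length and character rules are my summary of GCP's account-ID format (6 to 30 characters, lowercase letters, digits, and hyphens, starting with a letter and not ending with a hyphen) and should be confirmed against the IAM documentation. The function name is hypothetical.

```python
import re

# Assumed GCP account-ID format: a lowercase letter first, then lowercase
# letters, digits or hyphens, and no trailing hyphen.
_ACCOUNT_ID = re.compile(r"[a-z](?:[-a-z0-9]*[a-z0-9])?")


def check_databricks_account_id(account_id: str) -> list:
    """Return a list of problems with account_id; an empty list means it passes."""
    problems = []
    if not 6 <= len(account_id) <= 30:
        problems.append("must be 6-30 characters long")
    if not _ACCOUNT_ID.fullmatch(account_id):
        problems.append("must start with a lowercase letter, use only a-z, 0-9 "
                        "and '-', and not end with '-'")
    if "databricks" not in account_id:  # naming rule from this article
        problems.append("should contain the keyword 'databricks'")
    return problems


if __name__ == "__main__":
    for candidate in ["databricks-gcs", "my-cluster-sa", "Databricks_SA"]:
        print(candidate, check_databricks_account_id(candidate) or "ok")
```

Returning a list of problems rather than a single boolean makes it easy to report every violation at once.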

1. Create a Service Account for the Databricks cluster.

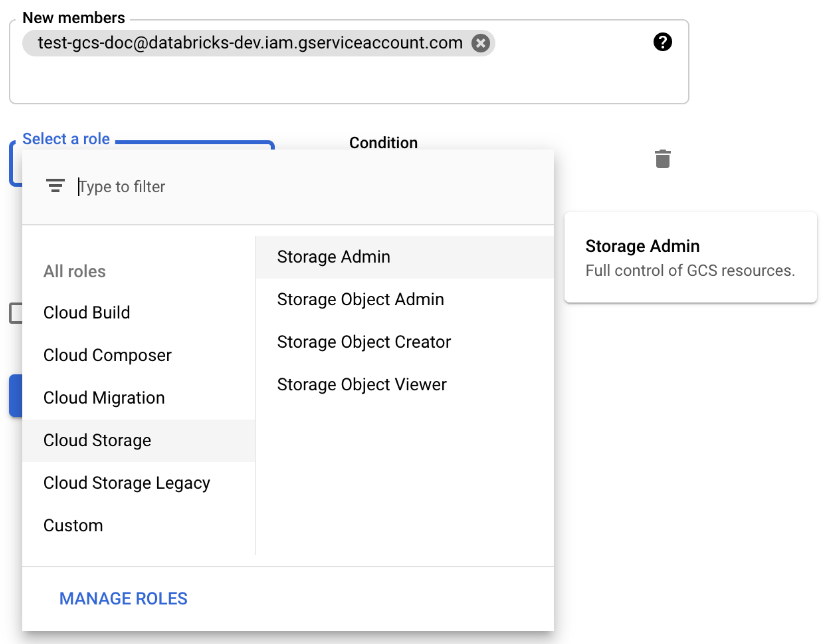

2. Create a private key (JSON key file) for the service account so it can access the GCS bucket directly.

3. On the bucket, grant the service account the Storage Admin role (listed under the Cloud Storage roles).

4. Put the Service Account key in Databricks secrets.

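```shell
# Authenticate the Databricks CLI against your workspace
databricks configure

# Create a secret scope and list the secrets it contains
databricks secrets create-scope --scope <scope-name>
databricks secrets list --scope <scope-name>

# Store the private_key and private_key_id fields of the JSON key file
databricks secrets put --scope <scope-name> --key gsa_private_key
databricks secrets put --scope <scope-name> --key gsa_private_key_id
```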

5. Configure the Databricks cluster by adding the following to its Spark config.

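```
spark.hadoop.google.cloud.auth.service.account.enable true
spark.hadoop.fs.gs.auth.service.account.email <client-email>
spark.hadoop.fs.gs.project.id <project-id>
spark.hadoop.fs.gs.auth.service.account.private.key {{secrets/<scope-name>/gsa_private_key}}
spark.hadoop.fs.gs.auth.service.account.private.key.id {{secrets/<scope-name>/gsa_private_key_id}}
```

Here `<client-email>` and `<project-id>` are the `client_email` and `project_id` fields of the service account's JSON key file.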

6. Configure a Google service account (optional).

As an alternative to the Spark config in step 5, you can specify the email address of the GCP service account whose identity the cluster launches with. All workloads running on the cluster can then access the underlying GCP services within the permissions granted to that service account in GCP.
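Steps 4 to 6 can also be scripted. The sketch below derives, from a service account JSON key, the request bodies for storing the two secrets, the Spark config from step 5, and a cluster spec that uses either that config or the step-6 service account. It assumes the Databricks REST endpoint `POST /api/2.0/secrets/put` and the Clusters API fields `spark_conf` and `gcp_attributes` (`google_service_account`, `zone_id`), which you should verify against the Databricks API reference; the scope name, cluster name, runtime version, node type, and sample key are hypothetical, and nothing is sent over the network.

```python
"""Sketch for steps 4-6: secret payloads, Spark config and a cluster spec
derived from a service account JSON key. Field names are assumptions to be
checked against the Databricks REST API reference. Nothing is sent."""
import json
from typing import Optional

# Fake key for illustration only; real key files contain more fields.
SAMPLE_KEY = json.dumps({
    "type": "service_account",
    "project_id": "my-project",
    "private_key_id": "0123abcd",
    "private_key": "-----BEGIN PRIVATE KEY-----\nFAKE\n-----END PRIVATE KEY-----\n",
    "client_email": "databricks-gcs@my-project.iam.gserviceaccount.com",
})


def secret_put_payloads(key_json: str, scope: str) -> list:
    """Step 4: one POST /api/2.0/secrets/put body per secret (assumed shape)."""
    key = json.loads(key_json)
    return [
        {"scope": scope, "key": "gsa_private_key", "string_value": key["private_key"]},
        {"scope": scope, "key": "gsa_private_key_id", "string_value": key["private_key_id"]},
    ]


def gcs_spark_conf(key_json: str, scope: str) -> dict:
    """Step 5: Spark config; the private key is referenced via secrets, not inlined."""
    key = json.loads(key_json)
    return {
        "spark.hadoop.google.cloud.auth.service.account.enable": "true",
        "spark.hadoop.fs.gs.auth.service.account.email": key["client_email"],
        "spark.hadoop.fs.gs.project.id": key["project_id"],
        # Doubled braces in the f-string yield a literal {{secrets/<scope>/...}}.
        "spark.hadoop.fs.gs.auth.service.account.private.key":
            f"{{{{secrets/{scope}/gsa_private_key}}}}",
        "spark.hadoop.fs.gs.auth.service.account.private.key.id":
            f"{{{{secrets/{scope}/gsa_private_key_id}}}}",
    }


def cluster_spec(spark_conf: Optional[dict] = None,
                 google_service_account: Optional[str] = None) -> dict:
    """Cluster body using either step 5 (spark_conf) or step 6 (service account)."""
    if (spark_conf is None) == (google_service_account is None):
        raise ValueError("pass exactly one of spark_conf or google_service_account")
    spec = {
        "cluster_name": "databricks-gcs-demo",   # hypothetical
        "spark_version": "<runtime-version>",    # placeholder
        "node_type_id": "<node-type>",           # placeholder
        "num_workers": 1,
        "gcp_attributes": {"zone_id": "AUTO"},   # the 'auto' availability zone
    }
    if spark_conf is not None:
        spec["spark_conf"] = spark_conf
    else:
        spec["gcp_attributes"]["google_service_account"] = google_service_account
    return spec


if __name__ == "__main__":
    conf = gcs_spark_conf(SAMPLE_KEY, "gcs-scope")
    print(json.dumps(cluster_spec(spark_conf=conf), indent=2))
```

Keeping the private key out of `spark_conf` and referencing it through `{{secrets/...}}` means the key itself never appears in the cluster definition.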

Sometimes one service needs to communicate with another. A Google Cloud service account can handle this: a single service account can be granted access to multiple projects, within the same or different accounts and organizations.

Follow these steps:

1. Create a Service Account.

2. Grant the service account the required permissions in its own project.

3. Optionally, grant specific users access to this service account.

4. Grant the service account the required permissions in the other projects as well.

5. Use the service account key to access both projects.
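Granting one service account roles in several projects amounts to adding the same member to each project's IAM policy. The sketch below shows the read-modify-write pattern behind `getIamPolicy`/`setIamPolicy` on plain dictionaries shaped like IAM policy JSON; fetching and writing the policies (for example with `gcloud projects get-iam-policy` and `set-iam-policy`, or simply `gcloud projects add-iam-policy-binding` per project) is left out. Project IDs, roles, and the e-mail address are hypothetical.

```python
"""Sketch: add one service account to role bindings in several projects'
IAM policies. Policies are plain dicts shaped like IAM policy JSON;
all identifiers in the example are hypothetical."""
import copy


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of policy with member added to role (idempotent)."""
    updated = copy.deepcopy(policy)  # never mutate the fetched policy; etag is kept
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        # Leave conditional bindings alone; only extend an unconditional one.
        if binding["role"] == role and "condition" not in binding:
            if member not in binding["members"]:
                binding["members"].append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated


def grant_across_projects(policies: dict, email: str, roles_by_project: dict) -> dict:
    """Map project ID -> updated policy, ready to write back with setIamPolicy."""
    member = f"serviceAccount:{email}"
    updated = {}
    for project, roles in roles_by_project.items():
        policy = policies.get(project, {"bindings": []})
        for role in roles:
            policy = add_binding(policy, role, member)
        updated[project] = policy
    return updated


if __name__ == "__main__":
    sa = "databricks-gcs@project-a.iam.gserviceaccount.com"
    fetched = {
        "project-a": {"bindings": [], "etag": "BwX1"},
        "project-b": {"bindings": [{"role": "roles/storage.objectViewer",
                                    "members": ["user:alice@example.com"]}],
                      "etag": "BwY2"},
    }
    wanted = {"project-a": ["roles/storage.admin"],
              "project-b": ["roles/storage.objectViewer"]}
    for project, policy in grant_across_projects(fetched, sa, wanted).items():
        print(project, policy["bindings"])
```

Keeping the fetched `etag` lets `setIamPolicy` reject the write if someone else changed the policy in the meantime, and the idempotent merge means re-running the script does not duplicate members.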

Databricks charges for Databricks usage in Databricks Units (DBUs). The number of DBUs a workload consumes varies based on a number of factors, including Databricks compute type (all-purpose or jobs) and the Google Cloud machine type.

Additional costs are incurred in your Google Cloud account:

Google Cloud charges an additional per-workspace cost for the GKE cluster that Databricks creates for Databricks infrastructure in your account. The cost for this GKE cluster is approximately $200/month, prorated to the days in the month that the GKE cluster runs.

The GKE cluster cost applies even if Databricks clusters are idle. To reduce this idle-time cost, Databricks deletes the GKE cluster in your account if no Databricks Runtime clusters are active for five days. Other resources, such as VPC and GCS buckets, remain unchanged. The next time a Databricks Runtime cluster starts, Databricks recreates the GKE cluster, which adds to the initial Databricks Runtime cluster launch time. For example, if you used a Databricks Runtime cluster on the first day of the month and never again that month, your GKE usage would cover only the five days before the idle timeout takes effect, costing approximately $33 for the month ($200 × 5/30).
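The proration in the example above is simple arithmetic. This sketch uses the article's approximate $200/month figure and assumes a 30-day month; the function name is hypothetical.

```python
"""Sketch of the GKE cost proration described above. The $200/month figure
is the article's approximation; a 30-day month is assumed by default."""


def prorated_gke_cost(days_running: float, days_in_month: int = 30,
                      monthly_cost: float = 200.0) -> float:
    """Approximate GKE cost for the days the GKE cluster exists in a month."""
    if not 0 <= days_running <= days_in_month:
        raise ValueError("days_running must be between 0 and days_in_month")
    return monthly_cost * days_running / days_in_month


if __name__ == "__main__":
    # Used on day 1 only: GKE runs about five days until the idle timeout deletes it.
    print(f"${prorated_gke_cost(5):.2f}")   # prints $33.33
    # Kept in use all month: the full monthly cost.
    print(f"${prorated_gke_cost(30):.2f}")  # prints $200.00
```

In a 31-day month the same five days would cost about $32.26, which is why the article's figure is an approximation.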

Sign up with your email address to receive news and updates from InMobi Technology